Challenge Yourself with the World's Most Realistic SPLK-3003 Test.

Which of the following server roles should be configured for a host which indexes its internal logs locally?

A. Cluster master

B. Indexer

C. Monitoring Console (MC)

D. Search head

Explanation: A host that indexes its internal logs locally should be configured as an indexer. An indexer is a Splunk Enterprise instance that indexes data, transforming raw data into events and placing the results into an index. It also searches the indexed data in response to search requests. Indexers can index their own internal logs, such as _internal, _audit, _introspection, and _metrics, which are useful for monitoring and troubleshooting Splunk Enterprise. Indexers can also forward data to other indexers or third-party systems.

Which of the following is the most efficient search?

A. index=www status=200 uri=/cart/checkout | append [search index = sales] | stats count, sum(revenue) as total_revenue by session_id | table total_revenue session_id

B. (index=www status=200 uri=/cart/checkout) OR (index=sales) | stats count, sum (revenue) as total_revenue by session_id | table total_revenue session_id

C. index=www | append [search index = sales] | stats count, sum(revenue) as total_revenue by session_id | table total_revenue session_id

D. (index=www) OR (index=sales) | search (index=www status=200 uri=/cart/checkout) OR (index=sales) | stats count, sum(revenue) as total_revenue by session_id | table total_revenue session_id

What is the primary driver behind implementing indexer clustering in a customer’s environment?

A. To improve resiliency as the search load increases.

B. To reduce indexing latency.

C. To scale out a Splunk environment to offer higher performance capability.

D. To provide higher availability for buckets of data.

Explanation: The primary driver behind implementing indexer clustering in a customer’s environment is to provide higher availability for buckets of data (option D). Indexer clustering is a Splunk Enterprise feature in which a group of indexers replicates each other’s data, so that the system keeps multiple copies of all data. This process is known as index replication. By maintaining multiple, identical copies of the data, clusters prevent data loss while promoting data availability for searching. Clustering also lets searching and indexing fail over to the remaining peers when one peer goes down. The other options are not the main reason for using indexer clustering. Option A is incorrect because clustering is about keeping data available when a peer fails, not about coping with a growing search load. Option B is incorrect because clustering does not reduce indexing latency (the time between ingestion and the data becoming searchable); if anything, replication adds some overhead. Option C is incorrect because scaling out for higher performance, that is, adding more nodes to increase capacity and throughput, can be done with additional non-clustered indexers; clustering adds availability rather than performance.

A customer is using regex to whitelist access logs and secure logs from a web server, but only the access logs are being ingested. Which troubleshooting resource would provide insight into why the secure logs are not being ingested?

A. list monitor

B. oneshot

C. btprobe

D. tailingprocessor

Explanation: The troubleshooting resource that gives insight into why the secure logs are not being ingested is the tailingprocessor. The TailingProcessor is the Splunk Enterprise component that monitors files and directories for new data, and it applies the whitelist and blacklist filtering rules set on monitor inputs in inputs.conf. Its file status output, exposed through the TailingProcessor:FileStatus REST endpoint, shows how each file was evaluated, including files that were skipped because they did not match the whitelist. Therefore, the correct answer is D, tailingprocessor.

A customer has 30 indexers in an indexer cluster configuration and two search heads. They are writing an SPL search for a particular use case, but are concerned that it takes too long to run, even over short time ranges. How can the Search Job Inspector capabilities be used to help validate and understand the customer's concerns?

A. Search Job Inspector provides statistics to show how much time and the number of events each indexer has processed.

B. Search Job Inspector provides a Search Health Check capability that provides an optimized SPL query the customer should try instead.

C. Search Job Inspector cannot be used to help troubleshoot the slow performing search; customer should review index=_introspection instead.

D. The customer is using the transaction SPL search command, which is known to be slow.

Explanation: Search Job Inspector provides statistics to show how much time and the number of events each indexer has processed. This can help validate and understand the customer’s concerns about the search performance and identify any bottlenecks or issues with the indexer cluster configuration. For example, the Search Job Inspector can show if some indexers are overloaded or underutilized, if there are network latency or bandwidth problems, or if there are errors or warnings during the search execution. The Search Job Inspector can also show how much time each search command takes and how many events are processed by each command.

A customer is migrating their existing Splunk indexers from an old set of hardware to a new set of indexers. What is the easiest method to migrate the system?

A. 1. Add new indexers to the cluster as peers, in the same site (if needed).

2. Ensure new indexers receive common configuration.

3. Decommission old indexers (one at a time) to allow time for CM to fix/migrate buckets to new hardware.

4. Remove all the old indexers from the CM’s list.

B. 1. Add new indexers to the cluster as peers, to a new site.

2. Ensure new indexers receive common configuration from the CM.

3. Decommission old indexers (one at a time) to allow time for CM to fix/migrate buckets to new hardware.

4. Remove all the old indexers from the CM’s list.

C. 1. Add new indexers to the cluster as peers, in the same site.

2. Update the replication factor by +1 to instruct the cluster to start replicating to new peers.

3. Allow time for CM to fix/migrate buckets to new hardware.

4. Remove all the old indexers from the CM’s list.

D. 1. Add new indexers to the cluster as new site.

2. Update cluster master (CM) server.conf to include the new available site.

3. Allow time for CM to fix/migrate buckets to new hardware.

4. Remove the old indexers from the CM’s list.

Explanation: The correct method to migrate the indexers from an old set of hardware to a new set of indexers is option C. This method ensures that the new indexers are added to the same site as the old indexers, and that the replication factor is increased by one to instruct the cluster to start replicating data to the new peers. This way, the cluster can maintain data availability and integrity during the migration process. After allowing enough time for the cluster master to fix and migrate buckets to the new hardware, the old indexers can be removed from the cluster master’s list.

A new search head cluster is being implemented. Which is the correct command to initialize the deployer node without restarting the search head cluster peers?

A. $SPLUNK_HOME/bin/splunk apply shcluster-bundle

B. $SPLUNK_HOME/bin/splunk apply cluster-bundle

C. $SPLUNK_HOME/bin/splunk apply shcluster-bundle -action stage

D. $SPLUNK_HOME/bin/splunk apply cluster-bundle -action stage

Explanation: To set up the HTTP Event Collector (HEC), each HEC input entry must contain a valid token. A token is a string of alphanumeric characters that acts as an identifier and an authentication code for the HEC input. You can generate a token when you create a new HEC input or use an existing token for multiple inputs. A token is required for sending data to HEC and for managing the HEC input settings.

In which of the following scenarios should base configurations be used to provide consistent, repeatable, and supportable configurations?

A. For non-production environments to keep their configurations in sync.

B. To ensure every customer has exactly the same base settings.

C. To provide settings that do not need to be customized to meet customer requirements.

D. To provide settings that can be customized to meet customer requirements.

Explanation: Base configurations are a set of configuration files that provide consistent, repeatable, and supportable configurations for Splunk deployments. Base configurations are not meant to be a one-size-fits-all solution, but rather a starting point that can be customized to meet customer requirements. Base configurations can help reduce errors, simplify maintenance, and improve performance by providing best practices and common settings for Splunk components. Therefore, the correct answer is D. To provide settings that can be customized to meet customer requirements.

A working search head cluster has been set up and used for 6 months with just the native/local Splunk user authentication method. In order to integrate the search heads with an external Active Directory server using LDAP, which of the following statements represents the most appropriate method to deploy the configuration to the servers?

A. Configure the integration in a base configuration app located in shcluster-apps directory on the search head deployer, then deploy the configuration to the search heads using the splunk apply shcluster-bundle command.

B. Log onto each search head using a command line utility. Modify the authentication.conf and authorize.conf files in a base configuration app to configure the integration.

C. Configure the LDAP integration on one Search Head using the Settings > Access Controls > Authentication Method and Settings > Access Controls > Roles Splunk UI menus. The configuration setting will replicate to the other nodes in the search head cluster eliminating the need to do this on the other search heads.

D. On each search head, log in and configure the LDAP integration using the Settings > Access Controls > Authentication Method and Settings > Access Controls > Roles Splunk UI menus.

Explanation: The most appropriate method to deploy the LDAP configuration to the search head cluster is to configure the integration in a base configuration app located in the shcluster-apps directory on the search head deployer, then deploy the configuration to the search heads using the splunk apply shcluster-bundle command. This method ensures that the LDAP settings are distributed to all cluster members in a consistent and efficient way, and that the configuration bundle is validated before deployment. The base configuration app holds settings that apply to all cluster members, such as authentication.conf and authorize.conf. The search head deployer is a Splunk Enterprise instance that manages the configuration bundle for the search head cluster and pushes it to the cluster members.

In which of the following scenarios is a subsearch the most appropriate?

A. When joining results from multiple indexes.

B. When dynamically filtering hosts.

C. When filtering indexed fields.

D. When joining multiple large datasets.

Explanation: A subsearch is a search that runs within another search, and provides input to the outer search. A subsearch is useful when the input to the outer search is not known in advance, but depends on the results of another search. A subsearch is also useful when the input to the outer search is too large to be specified manually, but can be generated by another search. Therefore, a subsearch is the most appropriate in the scenario when dynamically filtering hosts. For example, if we want to filter the hosts that have a certain value of a field, we can use a subsearch to find those hosts and pass them to the outer search. For example:

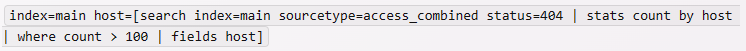

This search will return the events from the main index that have a host value that matches the subsearch. The subsearch will find the hosts that have more than 100 events with status 404 in the access_combined sourcetype, and pass them to the outer search as a list of values. This way, we can dynamically filter the hosts based on another search criterion.
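Written out as text, a search matching that description might look like the sketch below. The index name, sourcetype, status value, and threshold come from the description; searching index=main inside the subsearch is an assumption, and this is not a transcription of the original search.

```spl
index=main
    [ search index=main sourcetype=access_combined status=404
      | stats count by host
      | where count > 100
      | fields host ]
```

The subsearch in square brackets runs first. Because it ends with `fields host`, only the host values are returned, and the outer search receives them as a set of host conditions joined with OR.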

The other scenarios are not as suitable for using a subsearch. When joining results from multiple indexes, we can use the join command or append command instead of a subsearch. When filtering indexed fields, we can use the where command or the search command instead of a subsearch. When joining multiple large datasets, we can use the map command or the multisearch command instead of a subsearch.
